Why does deep learning use only one type of activation function?

Wouldn't mixing several types of activation functions give better performance?

This question came to me while I was studying deep learning through lectures.

In humans, multiple activation functions are mobilized during learning to carry out complex learning.

So why does deep learning learn using only one activation function?

Today we will look at the answer to this question.

Table of contents

1. The role of the activation function

2. Does the type of activation function matter?

3. The decisive reason to use only one type of activation function

4. Reasons for using only one activation function - Summary

1. The role of the activation function

The figure above shows, in an easy-to-follow way, the mathematical calculations performed in a neural network model.

Please refer to the figure above as you read!

To understand why only one type of activation function is used,

you first need to know what an activation function does.

Only once you know its role can you understand why a single type is used for learning.

The role of the activation function is as follows.

It filters the input values of each neural network layer and adjusts the output values.

And because it is a "non-linear function,"

it is what makes it meaningful to stack neural network layers by the thousands.
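To see why non-linearity is what makes stacking meaningful, here is a minimal pure-Python sketch. It assumes two toy layers with hypothetical weights `W1` and `W2` (no biases, for simplicity) and hypothetical helper functions `matvec`, `matmul`, and `relu`. Without an activation function, applying the two layers one after the other gives exactly the same result as a single merged layer `W2 @ W1`; inserting ReLU between them breaks that equivalence.

```python
def matvec(m, v):
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def relu(v):
    """Element-wise ReLU: negative entries become 0."""
    return [max(0.0, t) for t in v]


# Hypothetical toy weights, chosen only for illustration (no biases).
W1 = [[1.0, 2.0], [3.0, -1.0]]
W2 = [[0.5, -1.0], [2.0, 1.0]]
x = [1.0, -2.0]

two_layers = matvec(W2, matvec(W1, x))       # layer 1, then layer 2, no activation
one_layer = matvec(matmul(W2, W1), x)        # the two layers merged into one matrix
with_relu = matvec(W2, relu(matvec(W1, x)))  # ReLU inserted between the layers

print(two_layers, one_layer)  # [-6.5, -1.0] [-6.5, -1.0]
print(with_relu)              # [-5.0, 5.0]
```

Because the two linear layers collapse into one, stacking thousands of them without an activation function would still only express a single linear map.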

A summary of the role of the activation function:

1. It gives neural network layers "non-linearity," which is what makes stacking layers on top of each other meaningful.

2. It limits the range (the upper bound) of the computed values, which affects how quickly learning proceeds.
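The "limiting the range" role can be seen by evaluating the standard definitions of sigmoid, tanh, and ReLU on a few inputs (the input values here are arbitrary). This is a small sketch, not library code: sigmoid squashes every input into (0, 1) and tanh into (-1, 1), while ReLU only clips negative inputs to 0 and has no upper bound, so the upper limit in point 2 applies to sigmoid and tanh but not to ReLU.

```python
import math


def sigmoid(z):
    """Logistic sigmoid, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def relu(z):
    """ReLU: 0 for negative inputs, identity otherwise."""
    return max(0.0, z)


inputs = [-100.0, -1.0, 0.0, 1.0, 100.0]
for name, f in [("sigmoid", sigmoid), ("tanh", math.tanh), ("relu", relu)]:
    # Round only for readable printing.
    print(name, [round(f(z), 4) for z in inputs])
```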

Now that we know what the activation function does, let's see whether the type of activation function matters.

If the shape of each type of activation function were a factor that greatly affected learning,

then it would seem reasonable to use several activation functions in combination.

2. Does the type of activation function matter?

We know that activation functions are important, but does the type of activation function really matter?

Today's deep learning is not some great algorithm or intelligent system; it is simply a huge mathematical function.

In the human body, the “stimulus threshold,” which acts as an activation function, is part of a complex neurological mechanism.

By contrast, the “activation function” used to train a machine in deep learning

does nothing more than help with the mathematical calculations and limit the range of the numbers.

In other words,

although the shape of an activation function has significant meaning for humans, where it performs a biological role,

for a machine performing deep learning it doesn't matter which activation function is used;

all that matters is "non-linearity" and "limiting the range of numbers."
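A quick way to check that any of these common functions supplies non-linearity: a linear function must satisfy f(a + b) = f(a) + f(b) for every pair of inputs, so a single pair that fails the test proves a function is non-linear. The sketch below uses the standard definitions of sigmoid, tanh, and ReLU; the helper `is_additive` and the test pair (1.0, -2.0) are illustrative choices, and "identity" stands for using no activation function at all.

```python
import math

# Standard definitions; "identity" stands for "no activation function".
activations = {
    "identity": lambda z: z,
    "sigmoid": lambda z: 1.0 / (1.0 + math.exp(-z)),
    "tanh": math.tanh,
    "relu": lambda z: max(0.0, z),
}


def is_additive(f, a, b, tol=1e-9):
    """A linear function must satisfy f(a + b) == f(a) + f(b) for all a, b."""
    return abs(f(a + b) - (f(a) + f(b))) < tol


a, b = 1.0, -2.0
for name, f in activations.items():
    print(name, is_additive(f, a, b))
```

Sigmoid, tanh, and ReLU all fail the test for this pair, while the identity passes (it is linear by definition); passing for one pair alone would not prove linearity.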


Of course, it is important to choose an activation function that suits the learning situation.

But that is a matter of learning time or computational efficiency;

it is not because the activation function performs some higher-order computation or has a causal relationship with the data.

However, the fact that the form of the function is unimportant does not, by itself, settle the question.

Next, let's look at the decisive reason for using only one type.

3. The decisive reason to use only one type of activation function

When humans learn, several activation functions work in combination, but

for deep learning machines, the activation function has only a mathematical meaning.

In other words,

using multiple activation functions does not change the learning mechanism;

it only makes the calculation more complicated,

and learning does not proceed smoothly because the values change differently depending on each function.

For example,

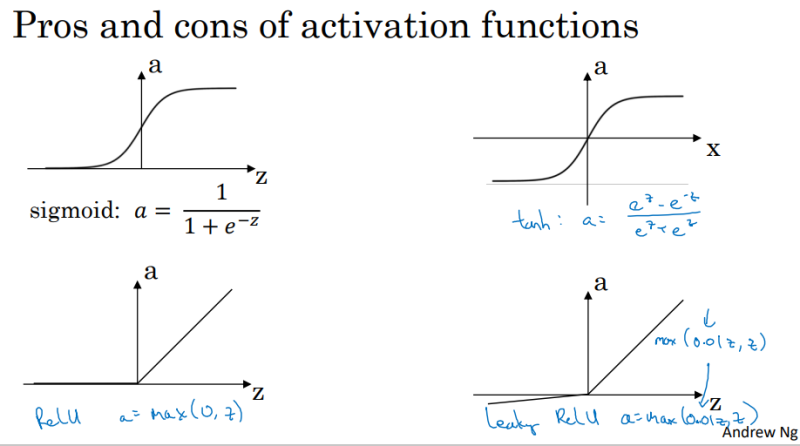

As shown in the figure above, suppose you want to train a deep learning model using both the ReLU and Tanh functions.

The output of the ReLU function is fed in as the input to the Tanh function,

and the output of the Tanh function is in turn fed in as the input to the ReLU function.

Every time the values pass through a layer,

the type of function changes, which causes the values to change greatly.
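The ReLU → Tanh → ReLU → Tanh chain described above can be traced with a tiny scalar sketch. Everything here is a hypothetical illustration: one weight per layer (`weights`), a shared `bias`, and the helper `forward` are made up for this example, and tracing a single input does not by itself measure learning performance. With input 1.0, the value goes from about 2.1 after the first ReLU, to nearly -1 after tanh, to exactly 0 after the next ReLU (which clips the negative input), and ends near 0.1.

```python
import math


def relu(z):
    return max(0.0, z)


# Hypothetical scalar "network": one weight per layer and a shared bias,
# with activations alternating ReLU -> Tanh -> ReLU -> Tanh.
weights = [2.0, -1.5, 3.0, 0.5]
bias = 0.1
layer_fns = [relu, math.tanh, relu, math.tanh]


def forward(x):
    """Pass x through each layer and record (activation name, output)."""
    trace = []
    for w, f in zip(weights, layer_fns):
        x = f(w * x + bias)
        trace.append((f.__name__, x))
    return trace


for name, value in forward(1.0):
    print(f"{name:>4}: {value:+.4f}")
```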

Also,

gradient descent, which updates the parameters using the derivatives computed during backpropagation, will not work properly either.
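To make the backpropagation point concrete, here is a hedged sketch that reuses the same hypothetical scalar stack (the weights, bias, and helper names such as `forward_backward` are illustrative assumptions, not a real framework API). By the chain rule, the derivative of the output with respect to the input is the product of each layer's weight times the derivative of its activation: ReLU contributes 1 or 0, and tanh contributes `1 - tanh(z)**2`, a value in (0, 1]. With input 1.0, the third layer's ReLU receives a negative input, so its factor is 0 and the whole gradient becomes 0.

```python
import math


def relu(z):
    return max(0.0, z)


def relu_grad(z):
    """Derivative of ReLU; we take it to be 0 at z == 0 by convention."""
    return 1.0 if z > 0 else 0.0


def tanh_grad(z):
    """Derivative of tanh: 1 - tanh(z)^2."""
    return 1.0 - math.tanh(z) ** 2


# Same hypothetical scalar stack: ReLU -> Tanh -> ReLU -> Tanh.
weights = [2.0, -1.5, 3.0, 0.5]
bias = 0.1
layers = [(relu, relu_grad), (math.tanh, tanh_grad),
          (relu, relu_grad), (math.tanh, tanh_grad)]


def forward_backward(x):
    """Return (output, d output / d x, per-layer chain-rule factors)."""
    factors = []
    for w, (f, df) in zip(weights, layers):
        z = w * x + bias
        factors.append(w * df(z))  # d(layer output)/d(layer input) = f'(z) * w
        x = f(z)
    grad = 1.0
    for factor in factors:
        grad *= factor  # chain rule: multiply the local derivatives together
    return x, grad, factors


y, grad, factors = forward_backward(1.0)
print([round(c, 4) for c in factors])
print("gradient is zero:", grad == 0.0)
```

Comparing the returned gradient with a finite-difference estimate at an input where no ReLU sits at its kink is a simple way to confirm the chain-rule product is computed correctly.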

As a result,

learning is less efficient than when one type of activation function is used,

the computational process becomes much more complex, and learning performance deteriorates.

4. Reasons for using only one activation function - Summary:

1. Activation functions play an important role within deep learning.

2. However, unlike in the human learning mechanism, the difference in shape between types of activation function does not have a significant effect on learning.

(The main reason ReLU is used is that it is fast to compute, which can shorten learning time.)

3. In addition, using multiple types makes the calculation more complicated, keeps learning from proceeding smoothly, and actually degrades the performance of the deep learning model.
